Let’s take a step back. The better part of a decade, at this point. Within a month or two of being hired as a Systems Engineer, and some time before I truly came to grasp the environment I had walked into (of course, almost nothing was documented), our systems were hit with ransomware. As soon as I realized what it was, and that was rather quickly, I strongly suggested locking down the firewall, but that was initially denied… because people in the field would be unable to do business. Excuse me? If you don’t act fast here, there may not be much of a business left with business to do. Long story short, I made the call to the co-lo myself to lock down the firewall. The ransomware managed to hit at least the two main servers. We had no intention of paying the ransom, maybe $30k+. I forget exactly. Could have been a lot more. Instead we quarantined the servers, wiped them, and reinstalled. We had backups, although they were file-based backups, and restoring several terabytes of data took probably close to half a day.

As we moved from pure crisis to the less chaotic remediation, I was able to speculate on more or less what happened. Keep in mind, I do not have a cybersecurity background, but that really was not needed here. I found that these Windows Servers were installed bare metal, and one was placed directly on the public internet, essentially so the people in the field could connect in via RDP. Why there was not a VPN, or even the /bare-minimum-although-still-bad-security/ option of only allowing TCP/3389 for RDP, I have no idea. A VPN was implemented quite quickly after this. Additionally, each location had a single flat /24 subnet, and the point of attack here was no different. Actually, it had two, but that’s a whole other thing.

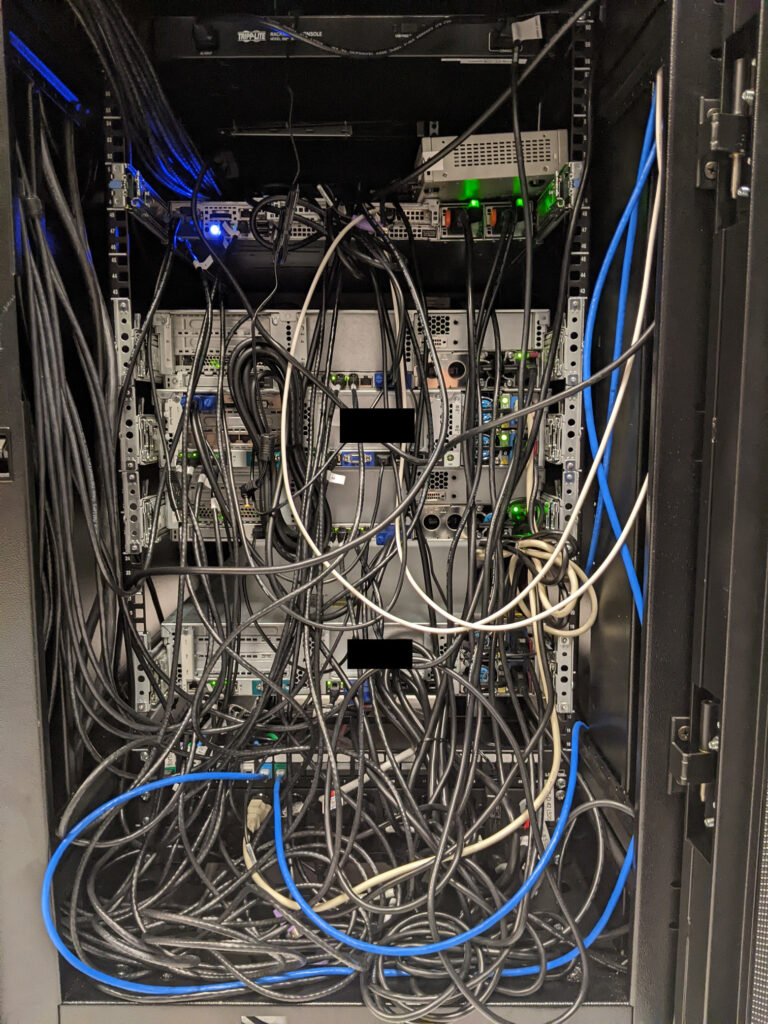

Fast forward a year or two, and the head of the IT department was let go from the company. It was a small team: myself on the infrastructure side of things, and one other person mostly handling end-user help desk, desktop support, and so on. The servers were by now several years beyond EOL, and also running standalone ESXi below the primary Windows Servers. Beyond EOL meant no BIOS updates without paying up, and the out-of-date BIOS meant ESXi would do stupid things all the time. The only way I could fix one of the recurring problems was to enter a higher-privileged command prompt, and documentation about that was not easy to find.

Suffice it to say, it all really needed to be torn down and rebuilt with a bespoke plan. Some time before the IT head left, he had asked me to look into a server upgrade. Even then I knew that upgrading just one server would not be sufficient. Coincidentally, not long after that I got a sales call from Nutanix. That was certainly interesting, but was going to be far more costly than any one of us liked. I was looking at VMware Essentials licenses to bring the current environment where it really needed to be, but that was also passed on. It was during this time that I also started testing XCP-ng after watching Tom Lawrence’s videos.

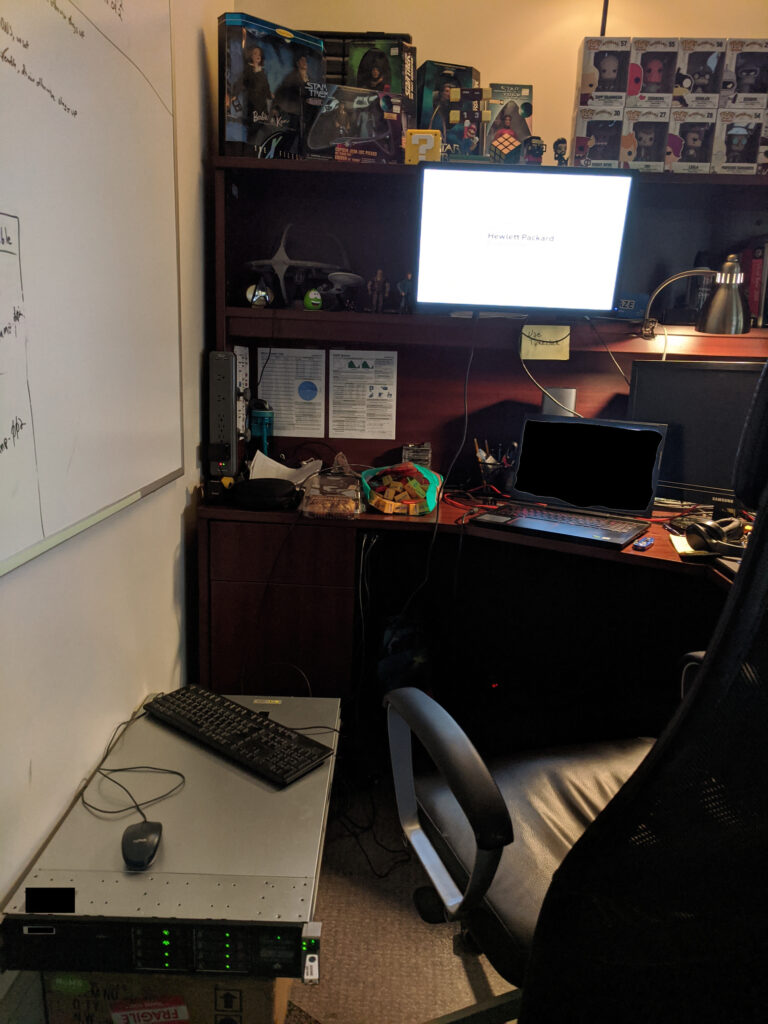

With the former IT head gone, I knew the responsibility was going to fall on me, even if I was not to be given his position and title. To be fair, I could have done nothing and allowed the servers to continue limping along, applying bandage after bandage until they finally failed. That was really all I could be held responsible for. However, I took it upon myself to shop around at different vendors and build a proposal to put in front of the CTO and other key people. My target budget was the figure I had heard the former IT head mention as the price paid for the old servers. My testing of XCP-ng was going extremely well, so I chose that as the foundation for the compute nodes, and TrueNAS Core for storage. Minimizing the software license costs allowed much more of the budget to be applied to hardware. The proposal had three configurations for compute and three for storage. It emphasized open source software, avoiding vendor lock-in, and avoiding arbitrary software licenses. It was quite beautiful.

Of course, the proposal was all about how necessary it was and how it would help the company. Personally, my primary motivator was that it would lead to far fewer headaches once complete. That was really my primary motivator for a lot of what I did there. Both can be true at the same time.

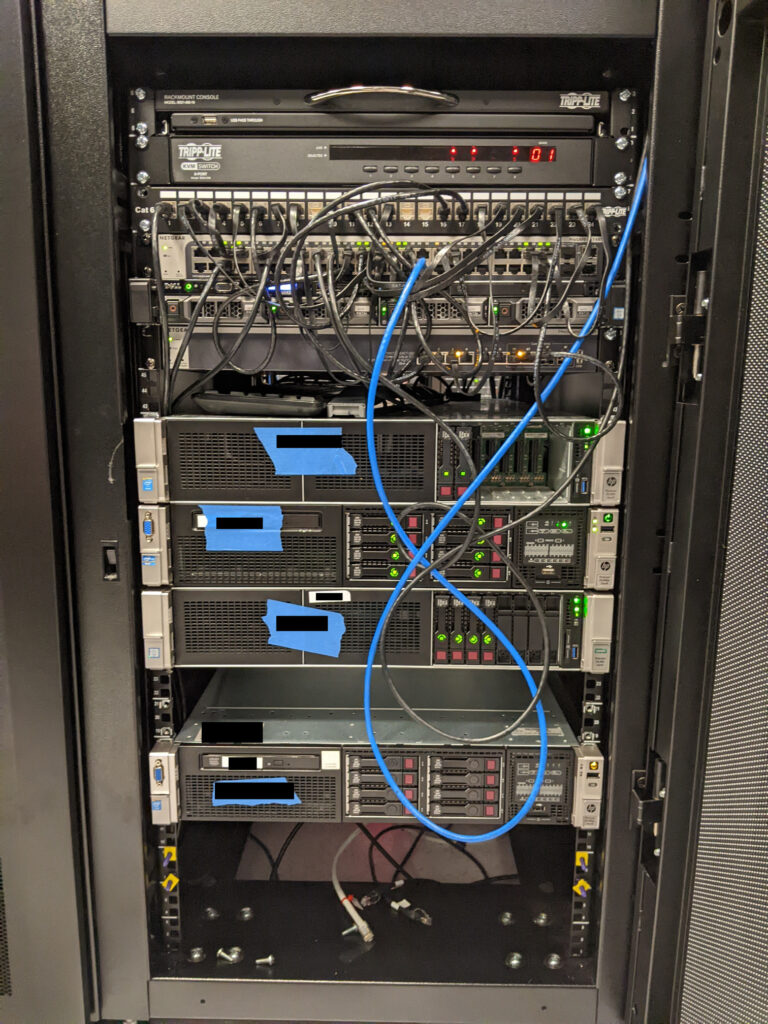

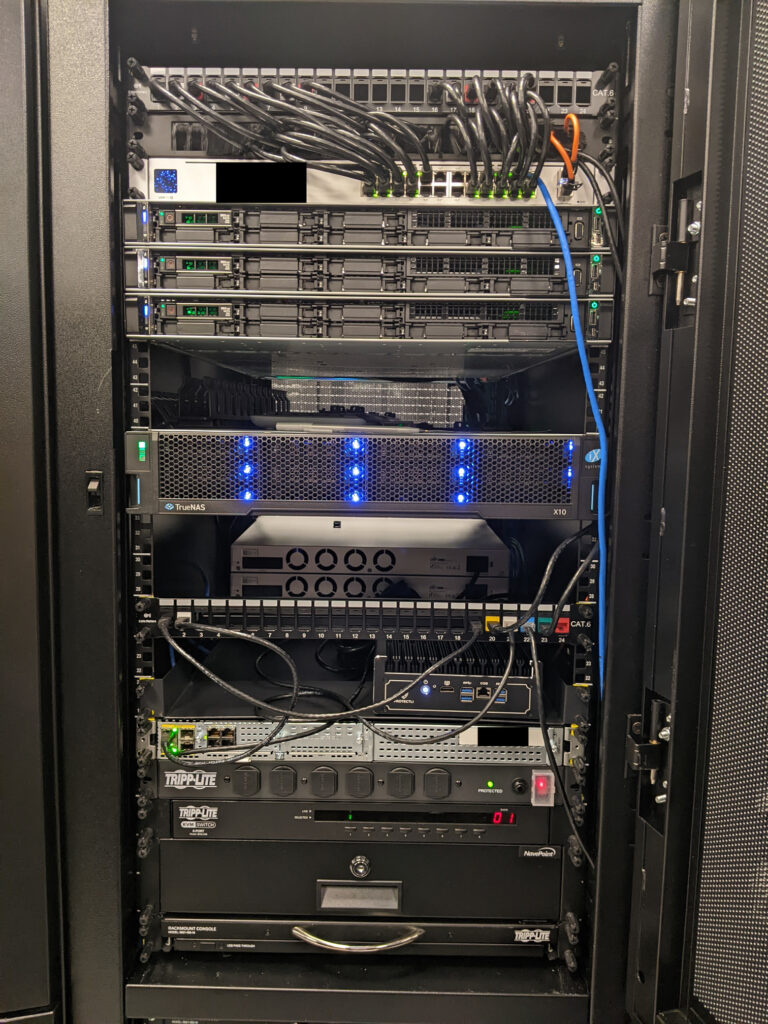

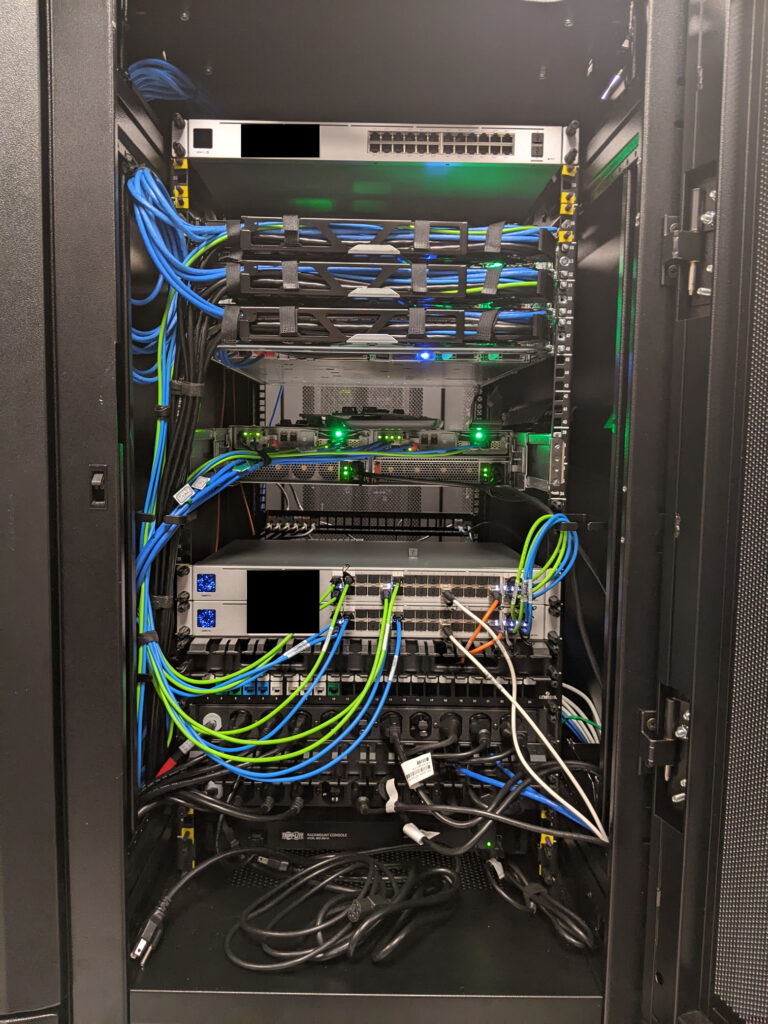

If I am not mistaken, they chose the top configurations for each. It ran circles around the old hardware in so many ways. 3x compute nodes, each with dual 20-core/40-thread CPUs, and a storage array with dual redundant controllers and 40+TB of spinning rust storage in RAID1+0. Network was nothing crazy, with a 24-port 1GbE access switch, and a 28-port 10GbE switch strictly for storage. The compute nodes had redundant SD cards for boot, where I installed XCP-ng, but did not have local storage (aside from a single unused 900GB drive, because they could not be ordered without at least one disk for whatever reason). Instead, they were tied to an iSCSI LUN on the TrueNAS array.

It was an absolute beast when completed. I was quite proud of that. Compared to a lot of businesses with presence in a data center, this probably was nothing all too impressive. However, I built that, top-to-bottom, where others might have a whole team of people to plan and implement over a long period of time. Yeah, I was quite proud of that. These photos were taken before it was finished, but that cable management is /nice/.

This all ties back to the ransomware attack. Fewer headaches meant tackling the problems surrounding the attack. Multiple compute nodes and redundant storage controllers meant greatly increased fault tolerance at the hardware level. Xen Orchestra snapshots and backups ensured far faster remediation in case of any future attack, especially since restoring from a snapshot or backup is only a few clicks and mostly limited by the speed of the disks and/or network. By contrast, in the event of a full restore, the old way of file-based backups required a fresh Windows Server install /before/ even starting the file restore. Live VM migrations between hosts were fast and painless. There was plenty of CPU headroom, such that all VMs could technically fit on a single host. That also meant that single-host updates and reboots (or Rolling Pool Updates) were absolutely trivial. Presuming an attack is happening, /and/ you are lucky enough to catch it, just shut down the affected VMs. Possibly even shut down all VMs, if necessary. Then restore from a snapshot or backup. It may be a good idea to keep networking disabled on the restored VM in case the ransomware is also present in that snapshot or backup. Once reasonably certain it is clean, bring networking back up.
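
The "all VMs fit on a single host" claim is easy to sanity-check with a few lines of Python. This is only a rough sketch: the 80 threads per host assumes each of the two CPUs is 20 cores / 40 threads, while the RAM figure, the VM names, and the VM sizes are made up for illustration and are not the real inventory.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    threads: int   # logical CPUs on the host
    ram_gib: int


@dataclass
class VM:
    name: str
    vcpus: int
    ram_gib: int


def fits_on_single_host(vms, host, vcpu_ratio=1.0):
    """Return True if every VM could run on `host` at the same time.

    vcpu_ratio is the allowed vCPU-to-thread overcommit (1.0 = none).
    """
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_ram = sum(vm.ram_gib for vm in vms)
    return total_vcpus <= host.threads * vcpu_ratio and total_ram <= host.ram_gib


# Hypothetical numbers: only the 80 threads is derived from the hardware above.
host = Host("compute-1", threads=80, ram_gib=512)
vms = [
    VM("file-server", vcpus=8, ram_gib=64),
    VM("app-server", vcpus=8, ram_gib=32),
    VM("domain-controller", vcpus=4, ram_gib=16),
    VM("utility", vcpus=4, ram_gib=8),
]

print(fits_on_single_host(vms, host))  # True: 24 vCPUs and 120 GiB fit easily
```

If this returns True, any one host can carry the whole pool while the others are patched and rebooted, which is what makes rolling updates painless.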

Additionally, to reduce attack surface, I put the XCP-ng hosts and TrueNAS array on a secured subnet that very little else had access to. Then, keep logins and passwords secure. Bitwarden, in this case.
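
To illustrate the intent of that secured subnet (default deny, with a short allowlist), here is a small Python model using the standard `ipaddress` module. It is not firewall configuration, and every address range in it is hypothetical.

```python
import ipaddress

# Hypothetical ranges, for illustration only.
MGMT_NET = ipaddress.ip_network("10.0.50.0/24")         # hypervisor hosts + storage
ADMIN_SOURCES = [ipaddress.ip_network("10.0.99.0/28")]  # a handful of admin machines


def allowed_into_mgmt(src: str) -> bool:
    """Default deny: only the management subnet itself and the admin
    allowlist may open connections to the management subnet."""
    addr = ipaddress.ip_address(src)
    return addr in MGMT_NET or any(addr in net for net in ADMIN_SOURCES)


print(allowed_into_mgmt("10.0.99.5"))   # True: admin workstation
print(allowed_into_mgmt("10.0.10.20"))  # False: ordinary office client
```

The point is that a compromised desktop on the office subnet has no path to the hypervisors or the storage array.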

Off-site replication to Backblaze was also a must, meeting and exceeding a 3-2-1 backup strategy.
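
For anyone unfamiliar, 3-2-1 means at least three copies of the data, on at least two different kinds of media, with at least one copy off-site. A quick Python sketch of that rule follows. The list of copies is an assumed layout for illustration; in particular, the local backup target is hypothetical, since the only destinations named here are the TrueNAS array and Backblaze.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    label: str
    medium: str    # e.g. "disk-array", "nas", "cloud-object-storage"
    offsite: bool


def meets_3_2_1(copies) -> bool:
    """At least 3 copies, on at least 2 media types, at least 1 off-site."""
    media = {c.medium for c in copies}
    return len(copies) >= 3 and len(media) >= 2 and any(c.offsite for c in copies)


# Assumed layout, not a record of the real environment.
copies = [
    BackupCopy("live data on the storage array", "disk-array", offsite=False),
    BackupCopy("local backup target (hypothetical)", "nas", offsite=False),
    BackupCopy("Backblaze replication", "cloud-object-storage", offsite=True),
]

print(meets_3_2_1(copies))  # True
```

Drop the off-site copy from the list and the check fails, which is exactly the case a site-wide incident would expose.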

All of that vastly improved remediation for an attack in progress, but later I would improve security further with a Darktrace security appliance and KnowBe4 for security awareness training. Maybe I will write about them later.

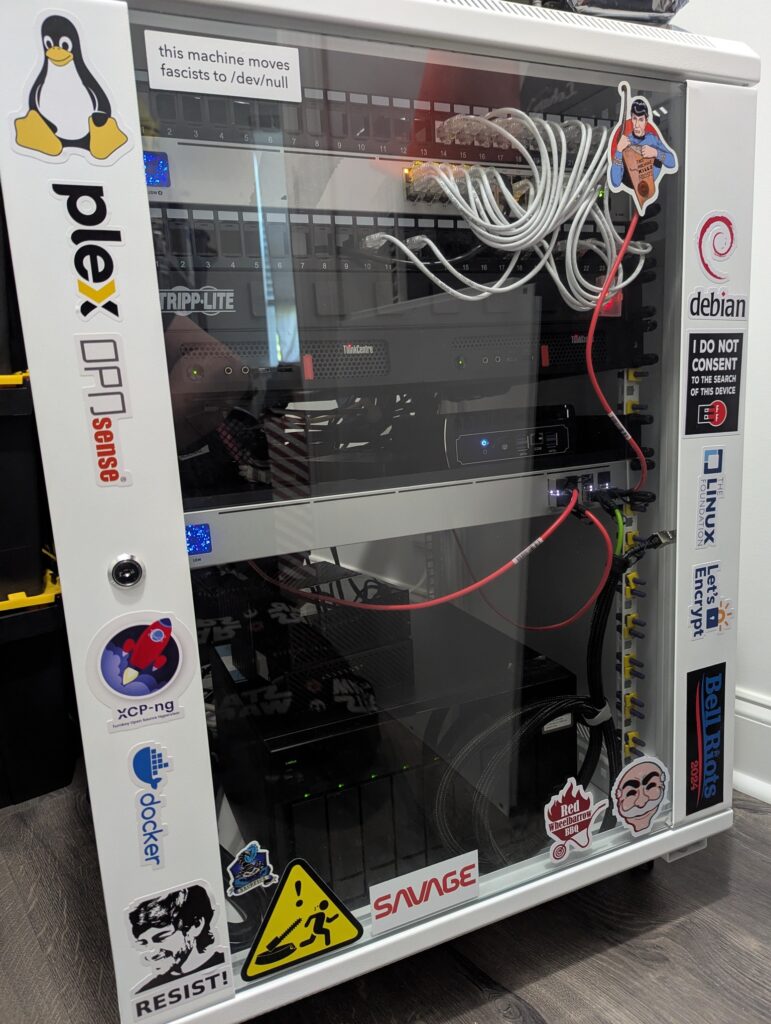

At home, my network and homelab were initially designed around lessons I learned from that, and things I learned at home were later applied at work as well. Both environments use XCP-ng, OPNsense, and UniFi.

“Plan for the worst, hope for the best.” – I most recently heard that on the fantastic series ‘The Pitt’, but it has been repeated in countless ways as long as people could repeat in countless ways. Sure, it’s about making everyone else’s jobs and/or lives easier, but at the end of the day don’t we all want to strive for fewer headaches?

I realize that this post is largely braggadocio, but I was having trouble getting started on writing anything else until I at least wrote this. At least there are some general lessons to take away here, yeah?
